Online ASR

Warning

In June 2024, IT management decided to stop the Online ASR service; please see RCS-ICT Steering committee Meeting - Summary. If you would like to have such a service, please contact IT engagement, led by Simone Campana.

So far, Live ASR takes its input as an RTMP stream. Any software that can send an RTMP stream can therefore use CERN Live ASR.

At present, our use case is based on the Zoom desktop app: anything you can hold on Zoom can be sent to CERN ASR, and the transcripts are shown either in Zoom itself, via its REST API, or on our web interface. The major difference between the two is latency: the former has a latency of about 4 seconds, due to the way the Zoom REST API works, while the latter has a latency of approximately 900 ms.

So far the service has been used successfully in a number of events (about 300 hours of production live streaming):

- ATLAS week on February 12th to 16th, 2024.

- CMS Plenaries on December 4th to 8th, 2023.

- ATLAS event on November 7th, 2023.

- ATLAS week on October 16th to 20th, 2023.

- LHCP 2023 on May 22nd to 26th, 2023.

- LHCP 2024 on June 3rd to 7th, 2024.

- FCC 2024 on June 10th to 14th, 2024.

Tech bits

Online transcription and translation sit at the state of the art of NLP (Natural Language Processing). On top of the complexity of handling accents, jargon, audio quality and language specifics, inference works against the clock: transcripts must be delivered with a latency below 1 second so that the system can be integrated with other services such as Webcast, AI chatbots, phone over IP, etc.

CERN ASR Live uses a model trained automatically on about 9000 hours of CERN audio, together with a language model extracted from the analysis of about half a million documents from CDS and CERN news. This hybrid approach, used by our contractor MLLP (Machine Learning and Language Processing), allows us to achieve better WERs (word error rates) than other systems such as Whisper. Although Whisper cannot be used for live ASR, it is one of the top free models for offline captioning nowadays. Our model is tuned for live ASR, and comparing a live ASR system with an offline one is not fair to the former, since latency weighs heavily on inference. Nevertheless, here is a comparative table as of 1st August 2024:

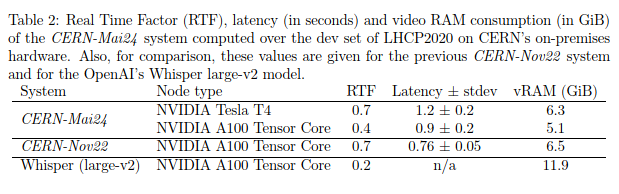
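For readers unfamiliar with the metric, WER is the word-level edit distance between a reference transcript and the system output, divided by the number of reference words. The following is a minimal sketch of that standard definition, not the evaluation code used for the table; the function name and the example sentences are made up for illustration, and real evaluations usually normalise case and punctuation first.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / reference word count."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] is the edit distance between the reference words processed
    # so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1      # reference word missing from hypothesis
            insertion = curr[j - 1] + 1  # extra word in hypothesis
            curr[j] = min(substitution, deletion, insertion)
        prev = curr
    return prev[-1] / len(ref)


# Illustrative sentences only (one substitution and one deletion, 7 reference words).
wer = word_error_rate(
    "the higgs boson decays to two photons",
    "the higgs boson decay to photons",
)
print(f"WER = {wer:.3f}")
```

A lower WER is better; a value of 0 means the hypothesis matches the reference word for word.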

Quoting the deliverable from MLLP, the research institution that developed the models for CERN use:

"It should be highlighted that the CERN-Mai24 system is both offline and streaming ASR ready. Table 2 provides Real Time Factor (RTF) figures to measure off-line ASR speed, latency figures (in seconds, accompanied by the observed standard deviation) to assess the delay in which the system is delivering live transcriptions under streaming conditions, and maximum video RAM (GPU memory) consumption. These figures have been computed by sequentially transcribing the development set of LHCP2020 (5.8 hours) in the two types of computing nodes featured by the CERN’s cluster: those featuring a NVIDIA Tesla T4 GPU, and those featuring a NVIDIA A100 Tensor Core GPU. Also, for comparison, these values have been computed for the previous CERN-Nov22 system, and for the OpenAI’s Whisper large-v2 model. Note that this latter is not capable to work under streaming conditions.

It is remarkable to see a streaming-ready ASR system such as CERN-Mai24 superseding in quality an offline ASR system, especially considering that the latter is trained with two orders of magnitude more data (680K hours for Whisper large-v2 vs 9K hours of CERN-Mai24 ). Finally, it must be concluded that in-domain data exploitation turns out to be key in order to outperform large pretrained models on very specific domains such as that of the CERN on particle physics."

Further details can be found at D3.6: Final development, deployment and testing of the Solution.
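As a reading aid for the figures quoted above, the Real Time Factor is conventionally the processing time divided by the duration of the audio, so an RTF below 1 means transcription runs faster than real time. The sketch below shows that calculation and a mean and standard deviation summary of latency samples. The function names and sample values are hypothetical, and the use of the sample standard deviation is an assumption, since the deliverable excerpt does not state which variant was reported.

```python
from statistics import mean, stdev


def real_time_factor(processing_seconds: float, audio_seconds: float) -> float:
    """RTF = time spent transcribing / duration of the audio transcribed."""
    if audio_seconds <= 0:
        raise ValueError("audio_seconds must be positive")
    return processing_seconds / audio_seconds


def latency_summary(latencies_seconds: list[float]) -> tuple[float, float]:
    """Return (mean, sample standard deviation); needs at least two samples."""
    return mean(latencies_seconds), stdev(latencies_seconds)


# Hypothetical numbers: 10 minutes of audio transcribed in 30 seconds.
print(real_time_factor(30.0, 600.0))

# Hypothetical per-segment streaming latencies, in seconds.
avg, sd = latency_summary([0.8, 1.0, 1.2])
print(f"latency = {avg:.2f} s (sd {sd:.2f} s)")
```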

How to use the service

In order to use the service, your account must be granted access to Live ASR services. If that is not the case, please open a SNOW ticket.

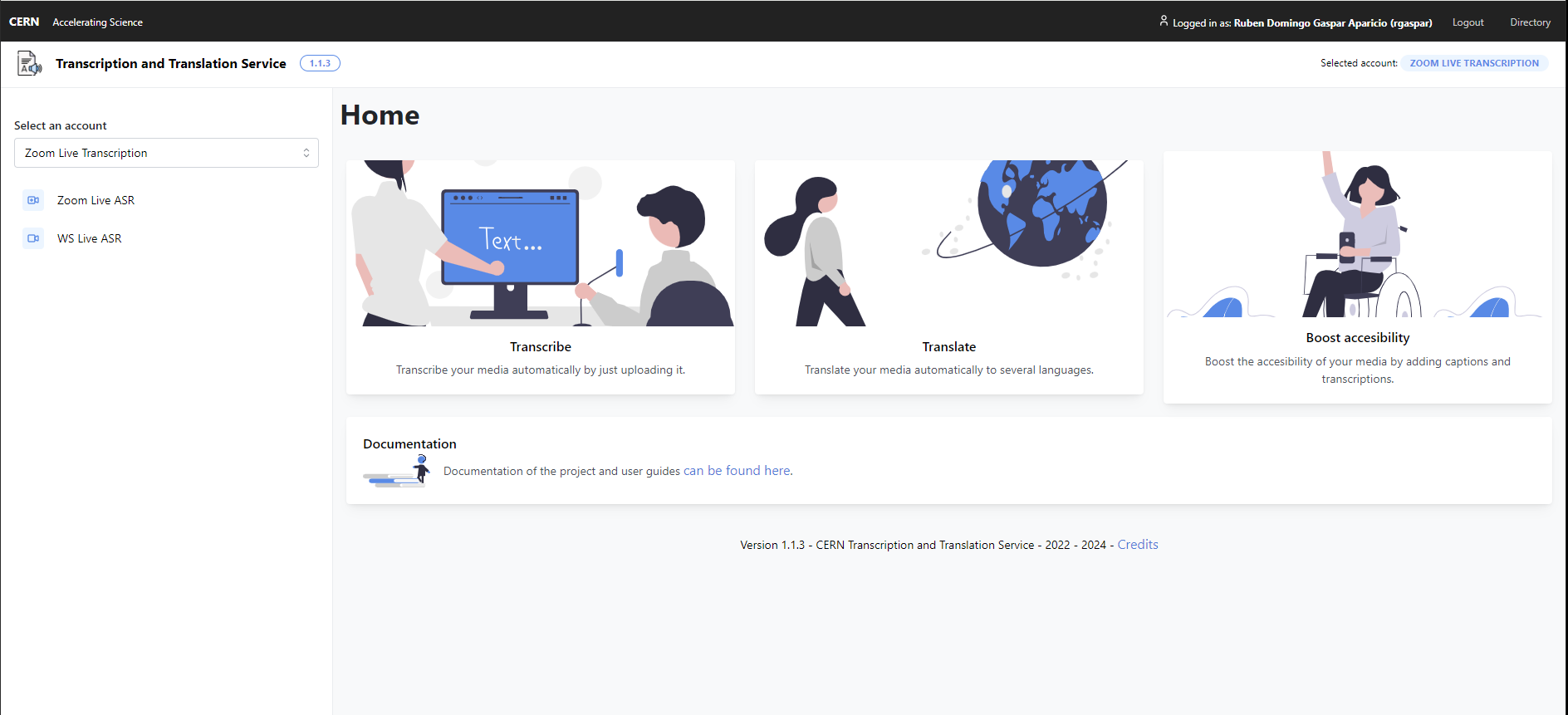

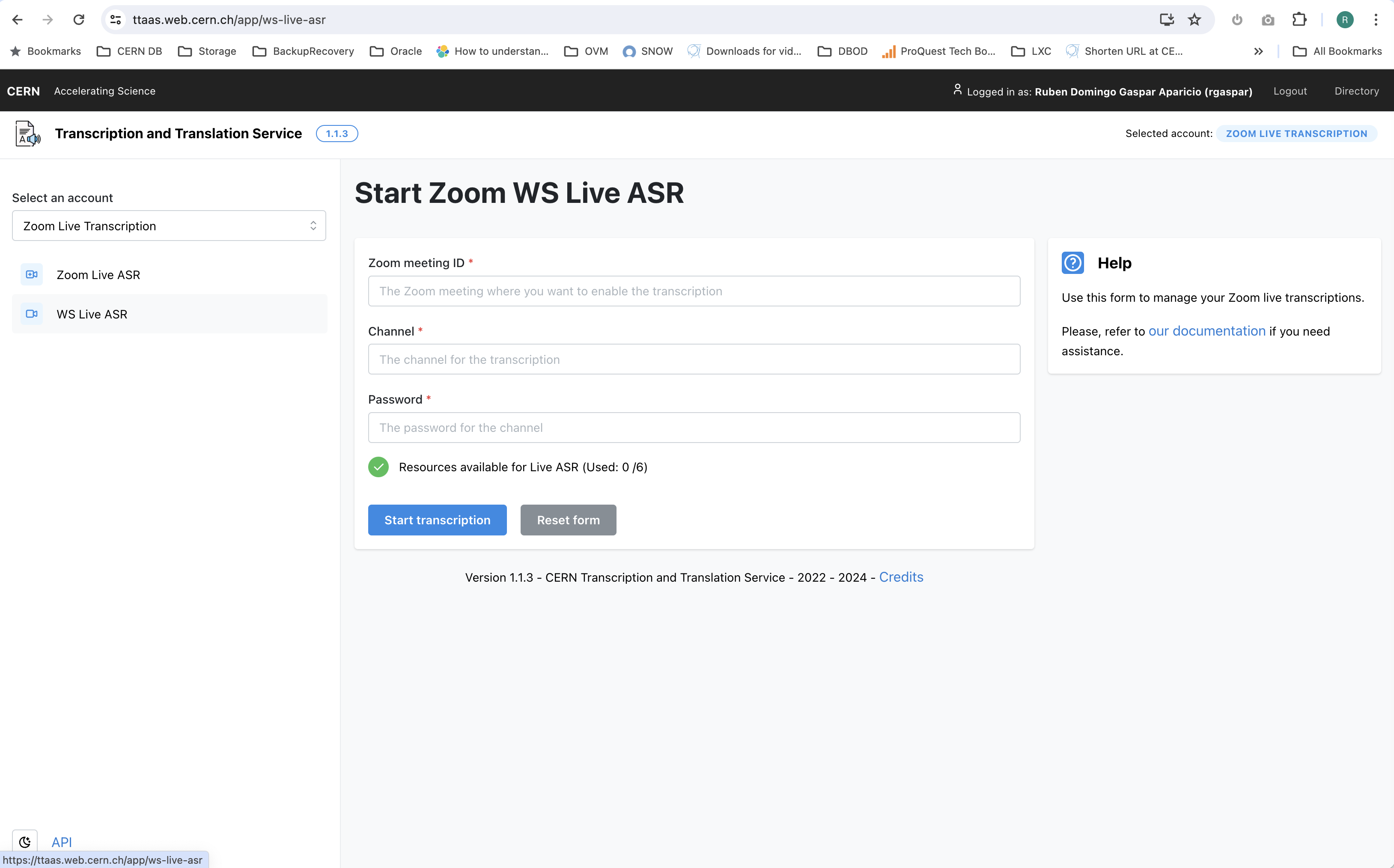

Log in to the TTaaS website and select Zoom Live Transcription. You will find two options:

- Zoom Live ASR: uses the Zoom app to display captions, with a latency of about 4 seconds.

- WS Live ASR: uses WebSockets to display captions in a browser, with a latency of less than 1 second.

Zoom Live ASR

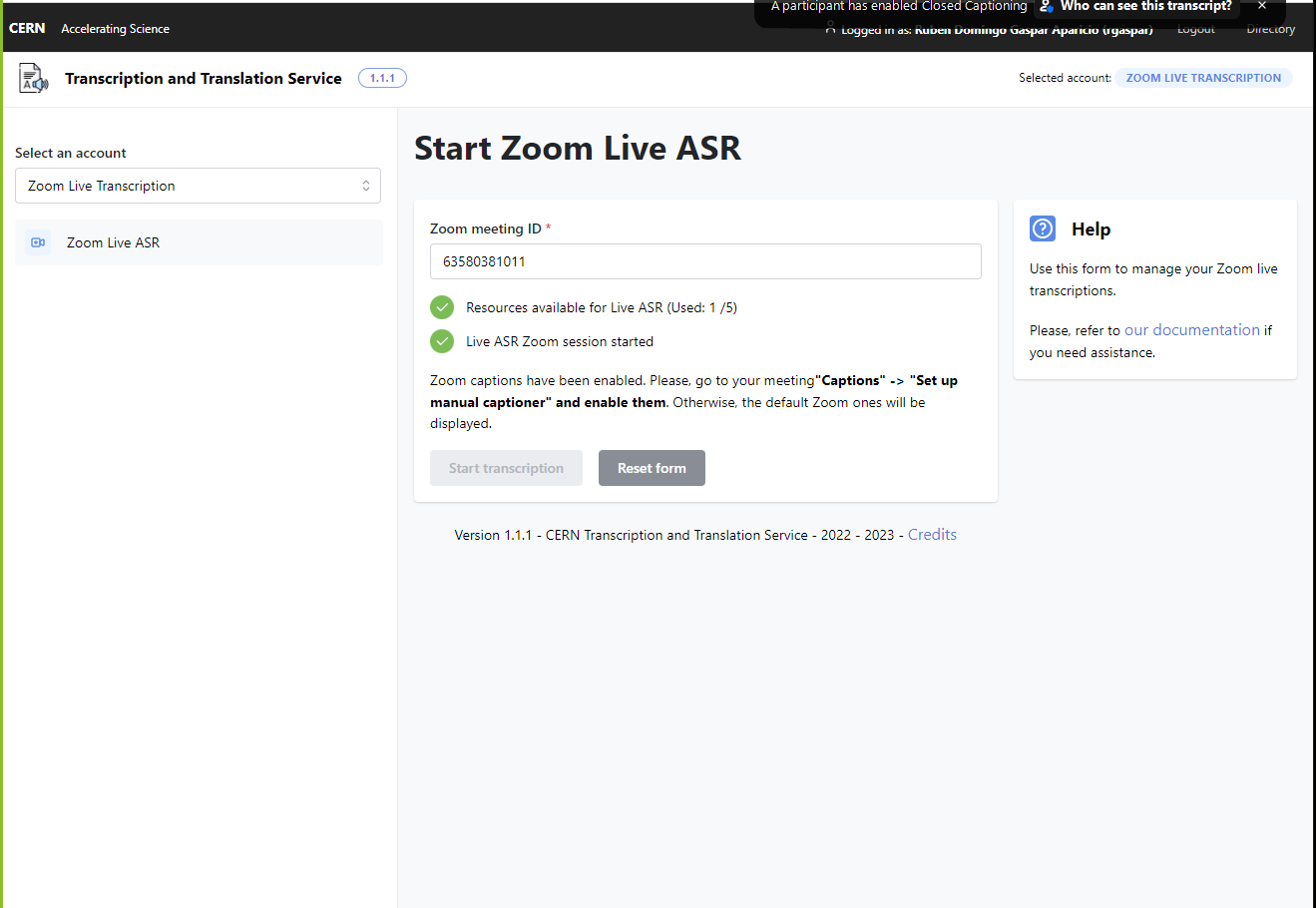

You just need to provide the zoomid of your Zoom meeting and click on start transcription. If all goes well, you are then connected to the CERN ASR system and your audio reaches CERN ASR via the RTMP protocol:

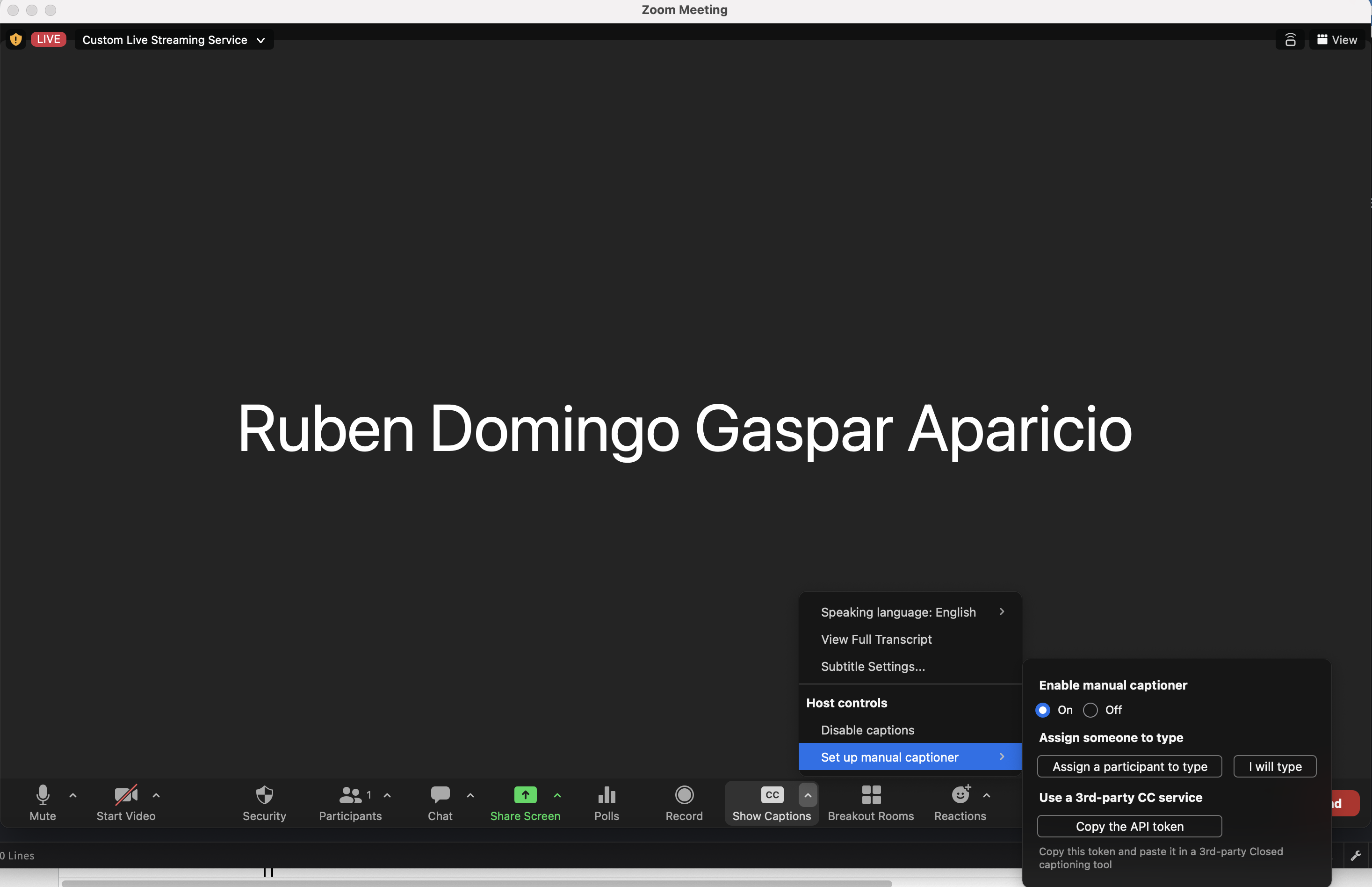

To see captions in your Zoom desktop client, go to Show Captions, then Set up manual captioner, and set it to On.

Once this is done, captions coming from CERN ASR are inserted into the Zoom app.

The advantages of this approach:

- Tight integration with the videoconference app, which lets users choose whether or not to see the captions.

- High quality captions, as CERN models are adapted to in-domain jargon.

- The Zoom app can be used in different ways to adapt the CC balloon so that it can be displayed on a big screen at a conference, e.g. LHCP 2023.

The disadvantage is the latency, about 4 seconds, due to the interaction with the Zoom REST API.

WS Live ASR

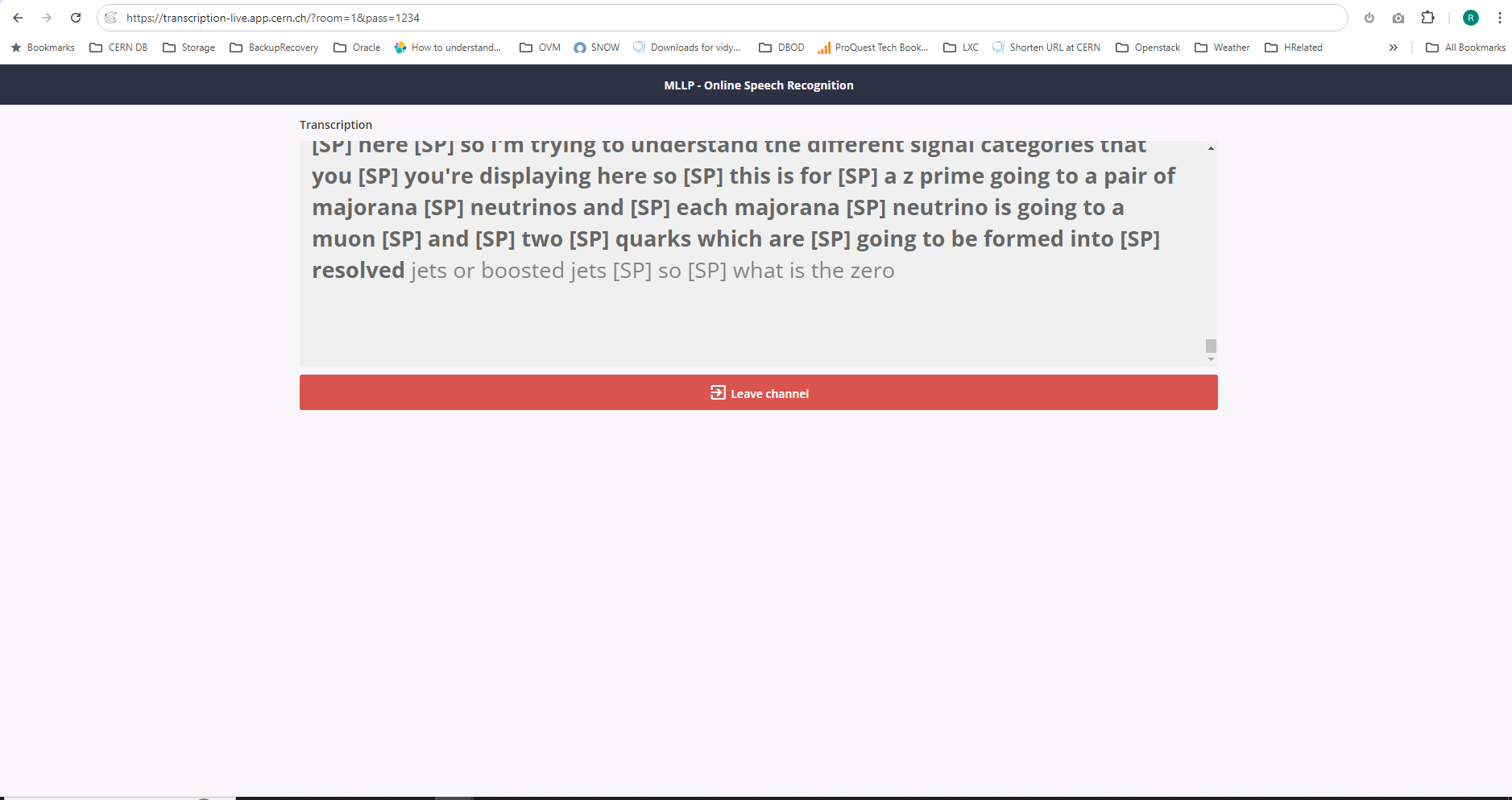

In order to reduce latency and to be independent of the videoconference client, the CERN ASR service developed a way to view the captions of an event via WebSockets. Using a browser on any platform, users can connect to a password-protected room and view the transcripts of that room's audio. The approach is platform and device agnostic, as long as a browser is available, and it scales if many users attend. Most importantly, latency is below 1 second.

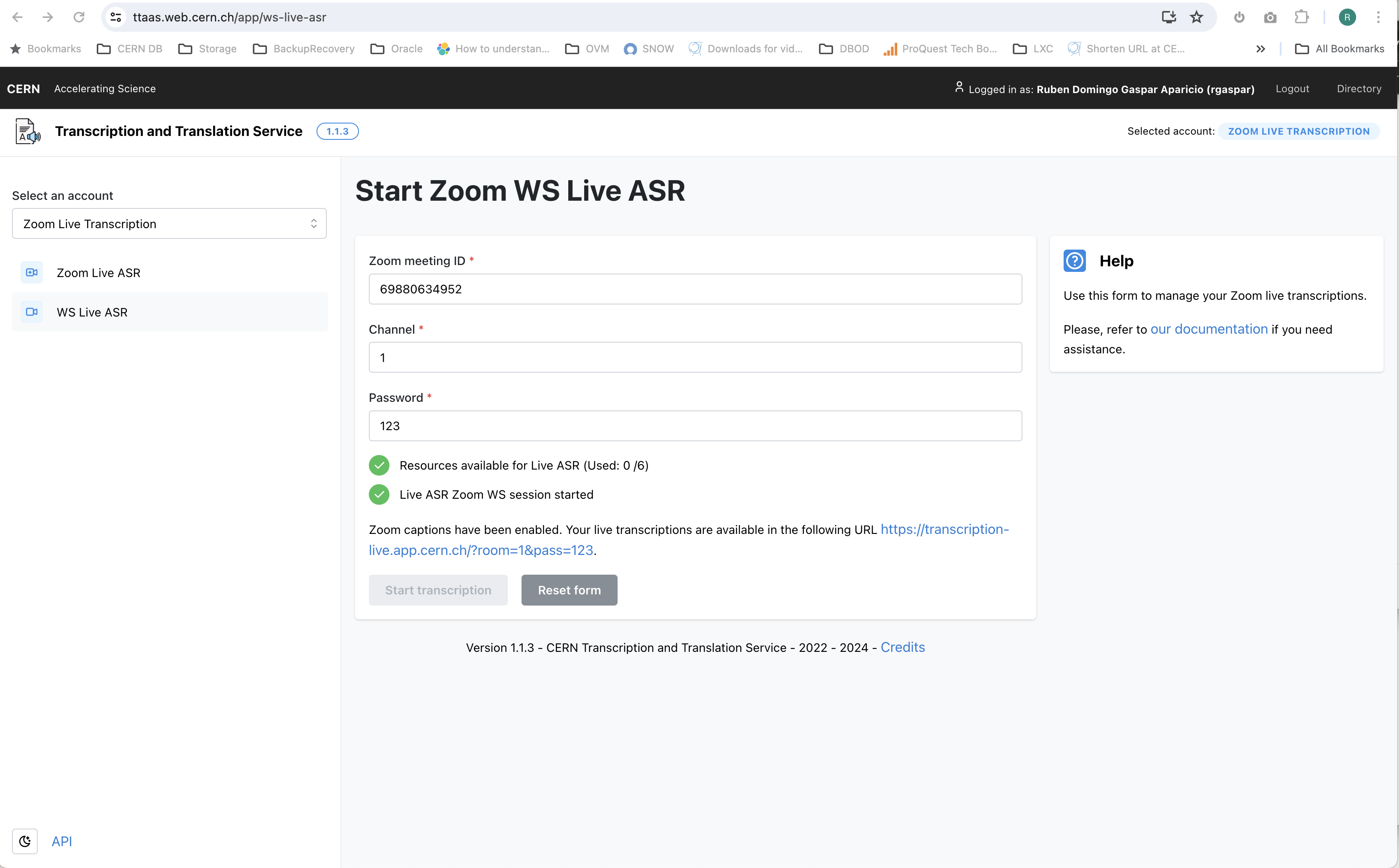

Once you have selected the WS Live ASR:

As usual, you will need a zoomid:

Once connected you will be able to watch captions at the URL indicated:

Note that SP means silent pause. It is detected by the engine and could in general be replaced by a label such as music, applause, etc., which would make the transcription friendlier for hearing-impaired users.
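The replacement described above could be sketched as a small post-processing step on the caption text. This is only an illustration: it assumes the marker arrives as a standalone, whitespace-delimited `SP` token, and the function name and default label are hypothetical rather than part of the CERN ASR service.

```python
import re

# Match "SP" only when it is a whole whitespace-delimited token,
# so words such as "SPS" are left untouched.
_SILENT_PAUSE = re.compile(r"(?<!\S)SP(?!\S)")


def label_silent_pauses(transcript: str, label: str = "[pause]") -> str:
    """Replace every standalone SP marker with a reader-friendly label."""
    return _SILENT_PAUSE.sub(label, transcript)


print(label_silent_pauses("thank you SP we now move to the SPS talk", "[applause]"))
```

Choosing which label to use (music, applause, and so on) would need extra information from the audio; the sketch only shows the text substitution.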

Latest architecture

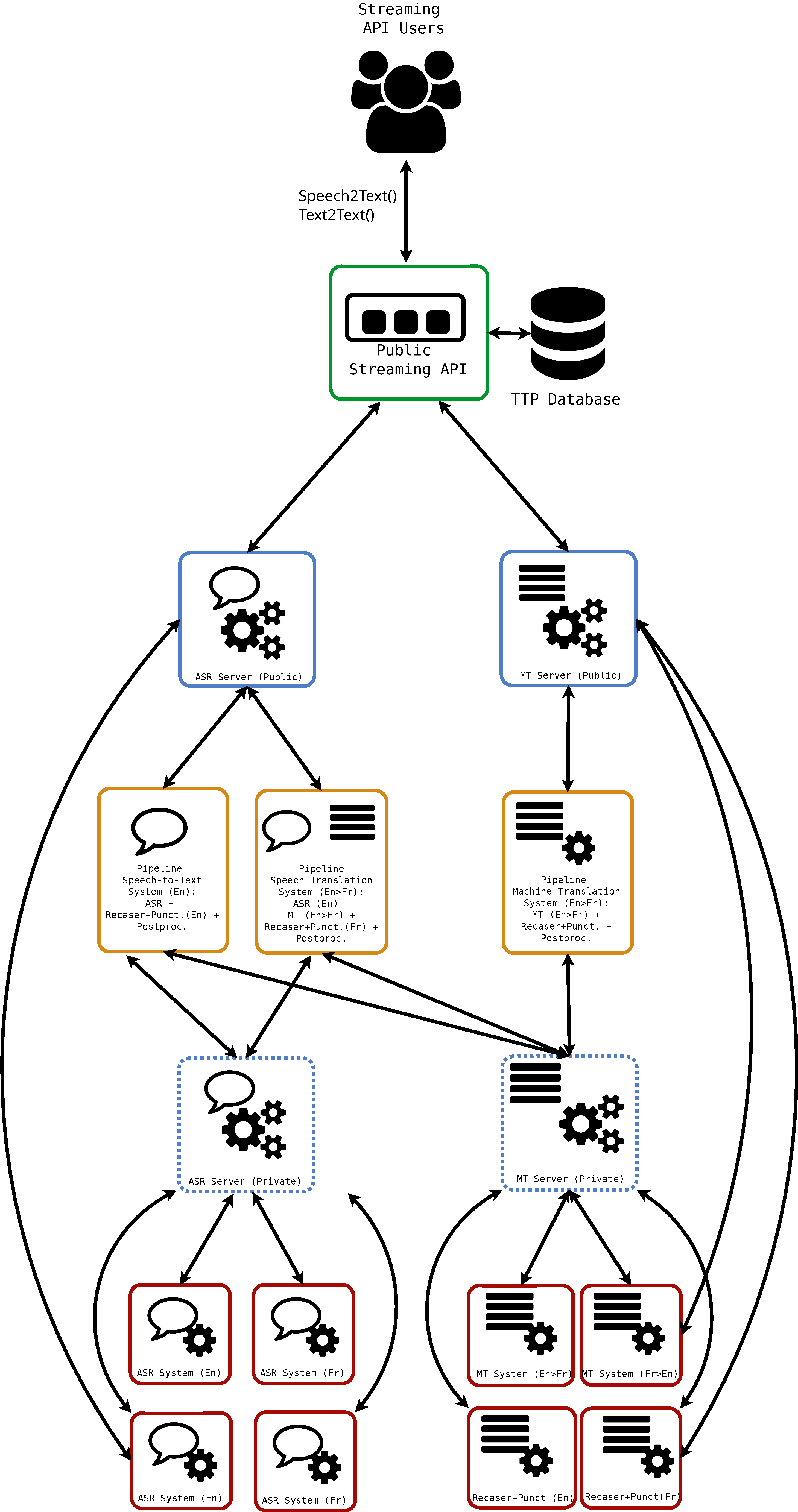

Following input from the user community, the TTaaS pilot service, together with MLLP, was evolving towards this architecture:

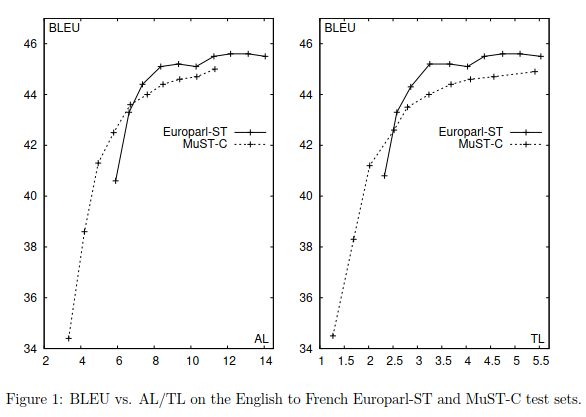

Using the same CERN models, the new architecture was intended to provide punctuation and translation (En -> Fr) with the same quality offered for the offline use case. The BLEU > 40 requested by the project is achieved with a latency of about 2 seconds and an average lagging of 6 words.

Further details can be found at D3.6: Final development, deployment and testing of the Solution.
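To give an intuition for a lag measured in words, the sketch below computes the Average Lagging metric as it is commonly defined for simultaneous translation: for each target word, how many source words had been read when it was emitted, compared with an ideal translator that keeps pace with the speaker. Whether the figure quoted above was computed exactly this way is an assumption; the function name and the example delays are hypothetical.

```python
def average_lagging(delays: list[int], source_length: int) -> float:
    """Average Lagging in source words.

    delays[t] is the number of source words already read when target
    word t (0-based) was emitted.
    """
    if not delays or source_length <= 0:
        raise ValueError("need at least one target word and a non-empty source")

    target_length = len(delays)
    rate = target_length / source_length  # target words per source word

    # tau: 1-based index of the first target word emitted once the whole
    # source has been read; later words add no further lag.
    tau = next(
        (t for t, read in enumerate(delays, start=1) if read >= source_length),
        target_length,
    )
    total = sum(delays[t - 1] - (t - 1) / rate for t in range(1, tau + 1))
    return total / tau


# Hypothetical example: a 10-word source translated into 10 words by a
# system that always waits for 3 source words before emitting the next one.
delays = [min(3 + t, 10) for t in range(10)]
print(average_lagging(delays, source_length=10))
```

With this definition, a system that consistently stays k source words behind the speaker has an average lagging of k.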

This work was stopped by the resolution of IT management to stop CERN Live ASR; see RCS-ICT Steering committee Meeting - Summary.